As the object of research rather than the subject of communication, the so-called Middle East has long been a locus for advanced technologies of mapping. In the field of aerial vision, these technologies historically employed cartographic and photographic methods. The legacy of cadastral, photographic and photogrammetric devices continues to impact how people and spaces are quantified, nowhere more so than when we consider the all-encompassing, calculative gaze of autonomous systems of surveillance. Perpetuated and maintained by Artificial Intelligence (AI), these remotely powered technologies herald an evolving era of mapping that is increasingly implemented through the operative logic of algorithms.

Algorithmically powered models of data extraction and image processing have, furthermore, incrementally refined neo-colonial objectives: whereas colonization, through cartographic and other less subtle means, was concerned with wealth and labour extraction, neo-colonization, while still pursuing such objectives, is increasingly preoccupied with data extraction and automated models of predictive analysis. Involving as it does the algorithmic processing of data to power machine learning and computer vision, the functioning of these predictive models is indelibly bound up with, if not encoded by, the martial ambition to calculate, or forecast, events that are yet to happen.

As a calculated approach to representing people, communities and topographies, the extraction and application of data is directly related to computational projection: ‘The emphasis on number and the instrumentality of knowledge has a strong association with cartography as mapping assigns a position to all places and objects. That position can be expressed numerically.’ If a place or object can be expressed numerically, it bestows a privileged command on to the colonial I/eye of the cartographer. This positionality can be readily deployed to manage – regulate, govern and occupy – and contain the present and, inevitably, the future.

These panoptic and projective ambitions, initially embodied in the I/eye of the singular figure of the cartographer, nevertheless need to be automated if they are to remain strategically viable. To impose a perpetual command entails the development of increasingly capacious models of programmed perception. Established to support the aspirations of colonialism and the imperatives of neo-colonial military-industrial complexes, contemporary mapping technologies – effected through the mechanized affordances of AI – extract and quantify data in order to project it back onto a given environment. The overarching effect of these practices of computational projection is the de facto expansion of the all-seeing neo-colonial gaze into the future.

The evolution of remote, disembodied technologies of perpetual surveillance, drawing as they did upon the historical logic and logistics of colonial cartographic methods, also necessitated the transference of sight – the ocular-centric event of seeing and perception – to the realm of the machinic. The extractive coercions and projective compulsions of colonial power not only saw the entrustment of sight to machinic models of perception but also summoned forth the inevitable automation of image production. It is within the context of Harun Farocki’s ‘operational images’, by way of Vilém Flusser’s theorization of ‘technical images’, that we can link the colonial ambition to automate sight with the role performed by AI in the extractive pursuits of neo-colonialism.

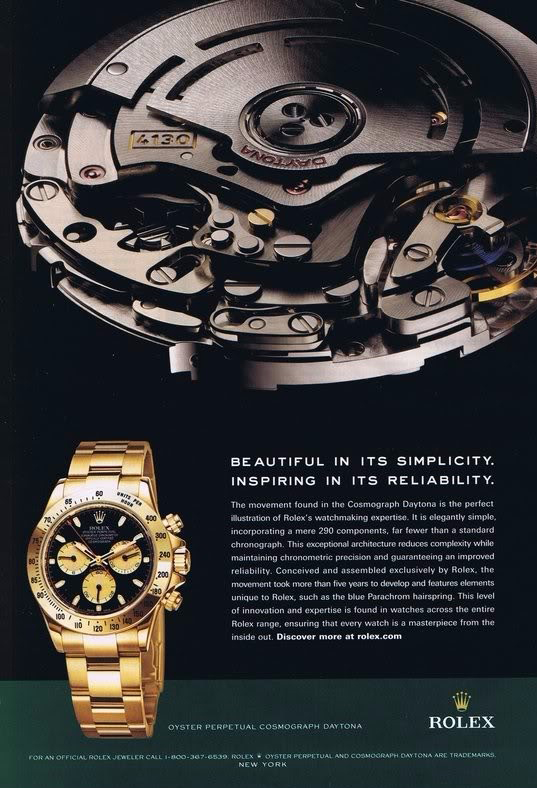

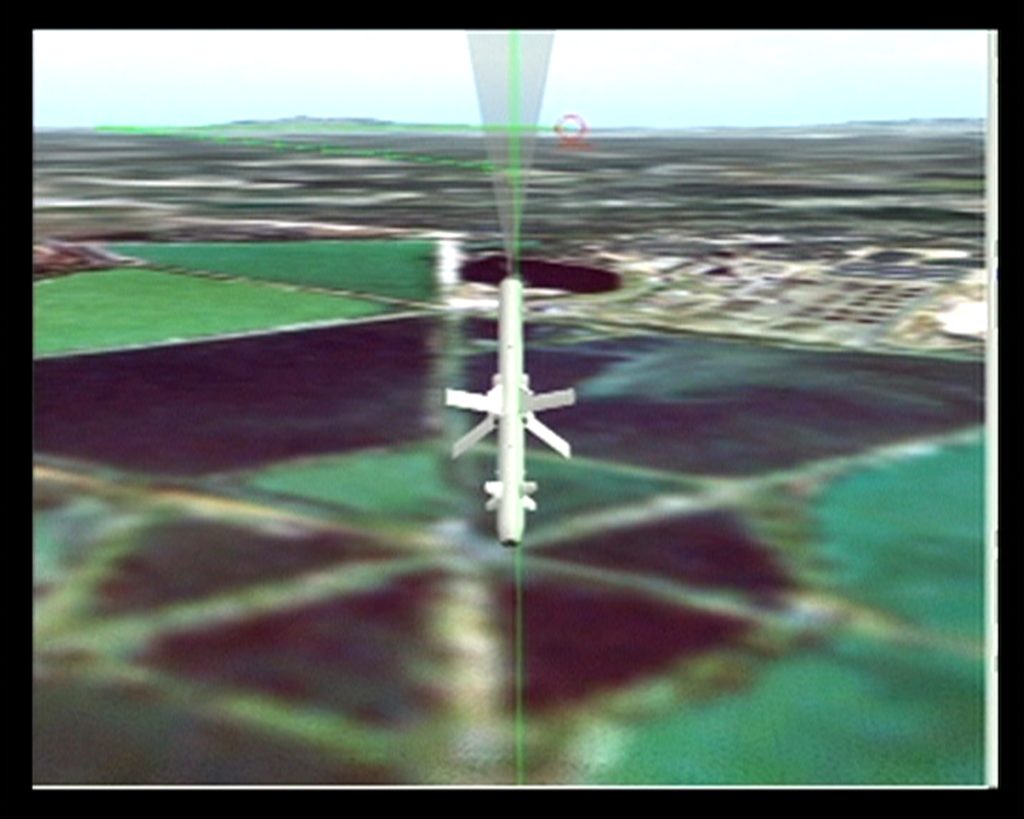

Harun Farocki, Eye Machine III, © Harun Farocki, 2003. Image via Springerin

Based as they are on alignments within processes of automation, mining, quantifying and archiving, ‘technical images’ and ‘operational images’ foreshadow methods of data retrieval, storage and targeting, which are now associated with the algorithmic apparatuses that power Unmanned Aerial Vehicles (UAV) and Lethal Autonomous Weapons (LAW). When we consider the relationship between ‘technical images’ and ‘operational images’, in the context of the devolution of ocular-centric models of vision and automated image processing, we can also more readily recognize how the deployment of AI in UAVs and LAWs propagates an apparatus of dominion that both contains and suspends the future, especially those futures that do not serve the imperatives of neo-colonization.

Projecting ‘thingification’

Understood as a system that demonstrates non-human agency, for Flusser an apparatus essentially ‘simulates’ thought and, via computational processes, enables models of automated image production to emerge. The ‘technical image’ is, accordingly, ‘an image produced by apparatuses’ – the outcome of sequenced, recursive computations rather than human-centric activities. ‘In this way,’ Flusser proposed, ‘the original terms human and apparatus are reversed, and human beings operate as a function of the apparatus.’ It is the sense of an autonomous machinic functioning behind image production that informs Harun Farocki’s seminal account of the ‘operational image’.

Void of aesthetic context, ‘operational images’ are part of a machine-based operative logic and do not, in Farocki’s words, ‘portray a process but are themselves part of a process.’ Indelibly defined by the operation in question, rather than any referential logic, these images are not propagandistic (they do not try to convince), nor are they levelled towards the ocular-centric realm of human sight (they are not, for example, interested in directing our attention). Inasmuch as they exist as abstract binary code rather than pictograms, they are likewise not imagistic – in fact, they are not even images: ‘A computer can process pictures, but it needs no pictures to verify or falsify what it reads in the images it processes.’ Reduced to numeric code, ‘operational images’, in the form of sequenced code or vectors, remain foundational to the development of recent models of recursive machine learning and computer vision.

In the final part of Farocki’s Eye/Machine trilogy (2001–2003) there is a conspicuous focus on the non-allegorical, recursively relayed image – the ‘operational image’ – and its role in supporting contemporary models of aerial targeting. In direct reference to the first Gulf War in 1991, and the subsequent invasions of Afghanistan and Iraq in 2001 and 2003, Farocki observed that a ‘new policy on images’ had ushered in a paradigm of opaque and largely unaccountable methods of image production that would inexorably inform the future of ‘electronic warfare’.

The novelty of the operational images in use in 2003 in Iraq, it has been argued, ‘lies in the fact that they were not originally intended to be seen by humans but rather were supposed to function as an interface in the context of algorithmically controlled guidance processes.’ Based as they are on numeric values, insular procedures, and a series of recursive instructions, we can therefore understand ‘operational images’ in algorithmic terms. Furthermore, the innate purposiveness of ‘operational images’ in contemporary theatres of war and guidance systems is, despite the opacities involved in their processes, repeatedly revealed in their real-world impact. The extent to which they are deployed in surveillance technologies and the establishment of autonomous models of warfare – defined here as ‘algorithmically controlled guidance processes’ – ensures that ‘operational images’ are used to target and, indeed, kill people.

Through locating the epistemological and actual violence that impacts communities and individuals who are captured, or ‘tagged’, by autonomous systems, we can further reveal the extent to which the legacy of colonialism informs the algorithmic logic of neo-colonial imperialism. The logistics of data extraction, not to mention the violence perpetuated as a result of such activities, is all too amply captured in Aimé Césaire’s succinct phrase: ‘colonisation = thingification’. Through this resonant formulation, Césaire highlights both the inherent processes of dehumanization practised by colonial powers and how, in turn, this produced the docile and productive – that is, passive and commodified – body of the colonized.

As befits his time, Césaire understood these configurations primarily in terms of wealth extraction (raw materials) and the exploitation of physical, indentured labour. However, his thesis is also prescient in its understanding of how colonization seeks unmitigated control over the future, if only to pre-empt and extinguish elements that did not accord with the avowed aims and priorities of imperialism: ‘I am talking about societies drained of their essence, cultures trampled underfoot, institutions undermined, lands confiscated, religions smashed, magnificent artistic creations destroyed, extraordinary possibilities wiped out.’ The exploitation of raw materials, labour and people, realized through the violent projections of western knowledge and power, employed a process of dehumanization that deferred, if not truncated, the quantum possibilities of future realities.

Predicting ‘unknown unknowns’

In the context of the Middle East, the management of risk and threat prediction – the containment of the future – is profoundly reliant on the deployment of machine learning and computer vision, a fact that was already apparent in 2003 when, in the lead-up to the invasion of Iraq, George W. Bush announced that ‘if we wait for threats to fully materialize, we will have waited too long.’ Implied in Bush’s statement, whether he intended it or not, was the unspoken assumption that counter-terrorism would be necessarily aided by semi- if not fully-autonomous weapons systems capable of maintaining and supporting the military strategy of anticipatory and preventative self-defence.

To predict threat, this logic goes, you have to see further than the human eye and act quicker than the human brain; to pre-empt threat you have to be ready to determine and exclude (eradicate) the ‘unknown unknowns’. Although it has been a historical mainstay of military tactics, the use of pre-emptive, or anticipatory, self-defence – the so-called ‘Bush doctrine’ – is today seen as a dubious legacy of the attacks on the US on 11 September 2001. Despite the absence of any evidence related to Iraqi involvement in the events of 9/11, the invasion of Iraq in 2003 – to take but one particularly egregious example – was a pre-emptive war waged by the US and its erstwhile allies in order to mitigate against such attacks in the future.

In keeping with the ambition to predict ‘unknown unknowns’, Alex Karp, the CEO of Palantir, wrote an opinion piece for The New York Times in July 2023. Although the piece was published 20 years after the invasion of Iraq, and was therefore written in a different era, the apparent threats to US security and the need for robust methods of pre-emptive warfare were at the forefront of Karp’s thinking, nowhere more so than when he espoused the seemingly prophetic if not oracle-like capacities of AI predictive systems.

Conceding that the use of AI in contemporary warfare needs to be carefully monitored and regulated, he proposed that those involved in overseeing such checks and balances – including Palantir, the US government, the US military and other industry-wide bodies – face a choice similar to the one the world confronted in the 1940s. ‘The choice we face is whether to rein in or even halt the development of the most advanced forms of artificial intelligence, which some argue may threaten or someday supersede humanity, or to allow more unfettered experimentation with a technology that has the potential to shape the international politics of this century in the way nuclear arms shaped the last one.’

Admitting that the latest versions of AI, including the so-called Large Language Models (LLMs) that have become increasingly popular in machine learning, are impossible to understand for user and programmer alike, Karp accepted that what ‘has emerged from that trillion-dimensional space is opaque and mysterious’. It would nonetheless appear that the ‘known unknowns’ of AI, the professed opacity of its operative logic (not to mention the demonstrable inclination towards erroneous prediction, or hallucinations), can still predict the ‘unknown unknowns’ associated with the forecasting of threat, at least in the sphere of the predictive analytics championed by Palantir. Perceiving this quandary and asserting, without much by way of detail, that it will be essential to ‘allow more seamless collaboration between human operators and their algorithmic counterparts, to ensure that the machine remains subordinate to its creator’, Karp’s overall argument is that we must not ‘shy away from building sharp tools for fear they may be turned against us’.

This summary of the ongoing dilemmas in the applications of AI systems in warfare, including the peril of machines that turn on us, needs to be taken seriously insofar as Karp is one of the few people who can talk, in his capacity as the CEO of Palantir, with an insider’s insight into their future deployment. Widely seen as the leading proponent of predictive analytics in warfare, Palantir seldom hesitates when it comes to advocating the expansion of AI technologies in contemporary theatres of war, policing, information management and data analytics more broadly.

In tune with its avowed ambition to see AI more fully incorporated into theatres of war, its website is forthright on this matter. We learn, for example, that ‘new aviation modernization efforts extend the reach of Army intelligence, manpower and equipment to dynamically deter the threat at extended range. At Palantir, we deploy AI/ML-enabled solutions onto airborne platforms so that users can see farther, generate insights faster and react at the speed of relevance.’ As to what reacting ‘at the speed of relevance’ means, we can only surmise that this has to do with the pre-emptive martial logic of autonomously anticipating and eradicating threat before it becomes manifest.

Palantir’s stated aim to provide predictive models and AI solutions that enable military planners to (autonomously or otherwise) ‘see farther’ is not only ample corroboration of its reliance on the inferential, or predictive, qualities of AI but, given its ascendant position in relation to the US government and the Pentagon, a clear indication of how such neo-colonial technologies will determine the prosecution and outcomes of future wars in the Middle East.

This ambition to ‘see farther’, already manifest in colonial technologies of mapping, also supports the neo-colonial ambition to see that which cannot be seen – or that which can only be seen through the algorithmic gaze and its rationalization of future realities. As Edward Said argues in his seminal volume Orientalism, the function of the imperial gaze – and colonial discourse more broadly – was ‘to divide, deploy, schematize, tabulate, index, and record everything in sight (and out of sight)’. This is the future-oriented algorithmic ‘vision’ of a neo-colonial world order – an order maintained and supported by AI apparatuses that seek to quarter, appropriate, realign, predict and record everything in sight – and, critically, everything out of sight.

The AI alibi

Although routinely presented as an objective ‘view from nowhere’ (a strategy used in colonial cartography), AI-powered models of unmanned aerial surveillance and autonomous weapons systems – given the enthusiastic emphasis on extrapolation and prediction – are epistemic constructions that produce realities. These computational constructions, provoking as they do actual events in the world, can also be used to justify the event of real violence. For all the apparent viability, not to mention questionable validity, of the AI-powered image processing models deployed across the Middle East, we therefore need to observe the degree to which ‘algorithms are political in the sense that they help to make the world appear in certain ways rather than others. Speaking of algorithmic politics in this sense, then, refers to the idea that realities are never given but brought into being and actualized in and through algorithmic systems.’

Carmel of southern Palestine, photographed between 1950 and 1977, Matson (G. Eric and Edith) Photograph Collection, Library of Congress. Image via Springerin

This is to recall that colonization, as per Said’s persuasive insights, was a ‘systematic discipline by which European culture was able to manage – and even produce – the Orient politically, socially, militarily, ideologically, scientifically, and imaginatively during the post-Enlightenment period.’ The fact that Said’s insights have become largely accepted if not conventional should not distract us from the fact that the age of AI has witnessed an insidious re-inscription of the racial, ethnic and social determinism that figured throughout imperial ventures and their enthusiastic support for colonialism.

In the milieu of so-called Big Data, machine learning, data scraping and applied algorithms, a form of digital imperialism is being profoundly, not to mention lucratively, programmed into neo-colonial prototypes of drone reconnaissance, satellite surveillance and autonomous forms of warfare, nowhere more so than in the Middle East, a nebulous, often politically concocted, region that has long been a testing ground for Western technologies. To suggest that the machinic ‘eye’, the ‘I’ associated with cartographic and other methods of mapping, has evolved into an unaccountable, detached algorithmic gaze is to highlight, finally, a further distinction: the devolution of deliberative, ocular-centric models of seeing and thinking to the recursive realm of algorithms reveals the callous rendering of subjects in terms of their disposability or replaceability, the latter being a key feature – as observed by Césaire – of colonial discourse and practice.

In light of these computational complicities and algorithmic anxieties, the detached apparatuses of neo-colonization, we might want to ask whether there is a correlation between automation and the disavowal of culpability: does the deferral of perception, and the decision-making processes we associate with the ocular-centric domain of human vision, to autonomous apparatuses guarantee that we correspondingly reject legal, political and individual responsibility for the applications of AI? Has AI, in sum, become an alibi – a means to disavow individual, martial and governmental liability when it comes to algorithmic determinations of life and, indeed, death?